API Advice

API Experts - APIs to Fight Fraud

Nolan Sullivan

September 20, 2022

TL;DR

- Tech writing teams are often papering over the gaps created by immature API development processes.

- As businesses grow, the complexity of managing even simple API changes grows exponentially.

- Designing an API is no different from building any product. The question of what framework you use should be dictated by the requirements of your users.

- Don’t build an API just to have one. Build it when you have customer use cases where an API is best suited to accomplishing the user’s goal.

- Good devEx is when your services map closely to the intention of the majority of your users.

- The mark of good devEx is when you don't have to rely on documentation to achieve most of what you're trying to do.

Introduction

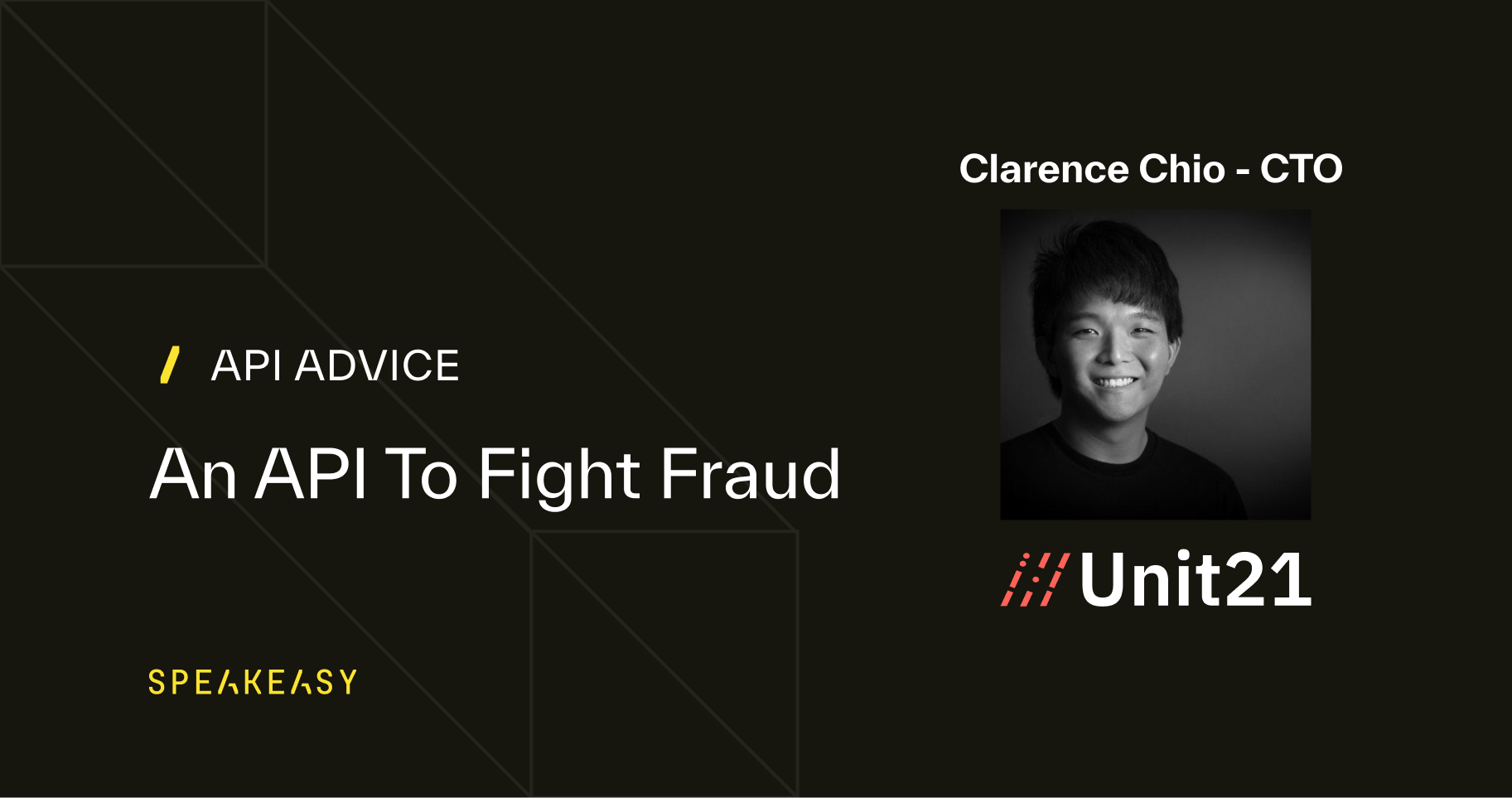

Clarence Chio is the CTO and one of the co-founders of Unit21. Unit21 is a no-code platform that helps companies fight transaction fraud. Since the company started in 2018, it has monitored over $250 billion worth of transactions and been responsible for preventing over $1 billion worth of fraud and money laundering. In addition to his work on Unit21, Clarence is a machine learning lecturer at UC Berkeley and the author of “Machine Learning & Security”.

API Development @ Unit21

What does API development look like at Unit21? Who is responsible for releasing APIs publicly?

API development is a distributed responsibility across the different product teams. Product teams build customer-facing features which take the form of dashboards in the web interface, API endpoints, or both. So generally, every product team maintains their own sets of API endpoints defined by the scope of the product vertical that they're working on.

But the people who make the decisions defining best practices and the consistency of how our APIs are designed are typically the tech leads working on our data ingestion team. As for who maintains the consistency of how APIs are documented and presented to our customers, that is our technical writing team.

How come the data ingestion team owns API architecture? Is that just a quirk of Unit21 engineering? Is there any resulting friction for API development in other parts of the engineering organization?

Yeah, the reason for this is pretty circumstantial. Most of our customers' API usage is with the APIs owned by our data ingestion team. We’re a startup and we don’t want too much process overhead, so we just let the team that owns the APIs with the most engagement maintain the consistency and define what the API experience should be.

As for friction with other parts of the org, it's largely been okay. I think the places of friction aren’t specifically related to having designated a specific product team to be the owner. A lot of the friction we’ve had was down to lack of communication or immature processes.

A common example is when the conventions for specific access patterns or query parameter definitions aren’t known across the org. If the review process doesn't catch that, it creates friction for the user. Then we have to go back and clean things up, and we have to handle changing customer behavior, which is never fun. However, it's fairly rare, and after a few incidents of this happening, we’ve started to nail down the process.
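One way to catch this kind of drift before review is an automated convention check. The following is a minimal sketch, not Unit21's actual tooling: the snake_case rule, the `ROUTES` table, and the paths and parameter names are all hypothetical, chosen only to illustrate the idea.

```python
import re

# Hypothetical convention: query parameter names are lower snake_case.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")

# Hypothetical route table mapping each path to its query parameter names.
ROUTES = {
    "/v1/events": ["start_date", "end_date", "page_size"],
    "/v1/entities": ["startDate", "limit"],
}


def find_violations(routes):
    """Return (path, param) pairs whose names break the convention."""
    return [
        (path, param)
        for path, params in sorted(routes.items())
        for param in params
        if not SNAKE_CASE.match(param)
    ]


violations = find_violations(ROUTES)
for path, param in violations:
    print(f"{path}: query parameter '{param}' is not snake_case")
```

A check like this could run in CI so that an inconsistent parameter name is flagged before it reaches customers.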

What is Unit21’s process to ensure that publicly released APIs are consistent?

There is a strenuous review process for any customer-facing change, whether it's on the APIs or the dashboards in the web app. Our customers rely on us to help them detect and manage fraud, so our product has to be consistent and reliable.

The API review process has a couple of different layers. First, we go through a technical or architectural review, depending on how big the changes are; this happens before any work is done to change the API definitions. The second review is a standard PR review process after the feature or change has been developed. Then there’s a release and client communication process that's handled by the assigned product manager and our technical writing team. They work with the customer success team to make sure that every customer is ready if there’s a breaking change.

If I were to ask a developer building public APIs at Unit21 what their biggest challenge was, what do you think they would say?

It's probably the breadth of coverage that is growing over time. Because of the variance in the types of things that our customers are doing through our API and all the other systems and ecosystems that our customers operate in, every time we add a new API the interactions between that API and all the other systems can increase exponentially.

So now the number of things that will be affected if, for example, we add a new field in the data ingestion API is dizzying. A new field affects anything that involves pulling this object type. Customers expect to be able to pull it from a webhook, from an export, from a management interface, and from any kind of QA interface. They also want to filter by it and search by it. All those things should be doable. So changing APIs that customers interact with frequently causes exponentially increasing complexity for our developers.

What are some of the goals that you have for Unit21’s API teams?

There's a couple of things that we really want to get better at. And by this, I don’t mean a gradual improvement on our current trajectory, but a transformational change. Because the way we have been doing certain things hasn't been working as well as I’d like. The first is the consistency of documentation.

When we first started building our APIs out, we looked at all the different types of spec frameworks, whether it was Swagger or OpenAPI, and we realized that the level of complexity required to support automated API doc generation would be too much ongoing effort to be worthwhile. It was a very clear answer. But as we continue to increase the scope of what we need to document and keep consistent, we've realized that the answer is no longer so clear.

Right now this issue is being covered over by our technical writing team working very, very closely with developers. And the only reason this works is because our tech writer is super overqualified. She's really a rock star who could be a developer if she wanted to. And we need her to be that good because we don't have a central standardized API definition interface; everything is still defined as Flask routes in a Python application. She troubleshoots and checks for consistency at the time of documentation because that’s where you can never hide any kind of inconsistency.

But this isn’t the long-term solution. We want to free up our tech writers for differentiated work and fix this problem at the root. We’re still looking into how to do this. We’re not completely sold on Swagger or OpenAPI, but if there are other types of interfaces through which we could standardize our API definition process, then we could potentially find a good balance between achieving consistency in how our APIs are defined and documented and the effort required from our engineering team. But yeah, the biggest goal is more documentation consistency.
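To illustrate what a central, standardized API definition could look like in its simplest form, here is a minimal sketch in plain Python. It is not Unit21's approach and it is not Swagger or OpenAPI; the `Param` and `Endpoint` classes, the `render_docs` helper, and the example endpoint are all hypothetical. The point is only that when endpoints are declared as data in one place, reference docs can be generated from the same source the code uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Param:
    name: str
    type: str
    required: bool = False


@dataclass(frozen=True)
class Endpoint:
    method: str
    path: str
    summary: str
    params: tuple = ()


# Hypothetical endpoint definitions: the single source of truth.
ENDPOINTS = [
    Endpoint(
        "POST",
        "/v1/events",
        "Ingest a transaction event",
        (Param("event_id", "string", required=True), Param("amount", "number")),
    ),
]


def render_docs(endpoints):
    """Render a plain-text reference from the endpoint definitions."""
    lines = []
    for ep in endpoints:
        lines.append(f"{ep.method} {ep.path} - {ep.summary}")
        for p in ep.params:
            flag = "required" if p.required else "optional"
            lines.append(f"  {p.name} ({p.type}, {flag})")
    return "\n".join(lines)


docs = render_docs(ENDPOINTS)
print(docs)
```

The trade-off described in the interview still applies: someone has to keep the definitions accurate, so the benefit depends on how much effort that takes compared with manual documentation checks.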

API Architecture Decisions

When did you begin offering APIs to your clients, and how did you know it was the right time?

When we first started, we did not offer public APIs. We started offering them when we realized that a lot of our customers had evolving use cases that needed a different mode of response from our systems. Originally, we could only tell them that a transaction was potentially fraudulent when their CSV or JSON file was uploaded into our web UI, and by then the transaction would already have been processed. In many use cases this was okay, but increasingly, many of our customers wanted to use us for real-time use cases.

We developed our first set of APIs so that we could give the customer near-real-time feedback on their transactions, so that they could then act on the bad event and block the user, or block the transaction, etc. That was the biggest use case that pushed us towards the public API road. Of course, there are also a bunch of other problems with processing batch files. Files are brittle, files can change, and validation is always a friction point. And the cost of I/O for large files is a big performance consideration.

Now we’re at the point where more than 90% of our customers use our public APIs. But a lot of our customers are hybrid, meaning they are not exclusively using APIs. Customers that use APIs also upload files to supplement them with more information. And we also have customers that are using our APIs to upload batch files; that's a pretty common thing for people to do if they don't need real-time feedback.

Have you achieved, and do you maintain, parity between your web app and your API suite?

We don't. We actually maintain a separate set of APIs for our private interfaces and our public interface. Many of them call the same underlying back-end logic. But for cleanliness, for security, and for just a logical separation, we maintain them separately, so there is no parity between them in a technical sense.

That’s a non-standard architecture choice. Would you recommend this approach to others?

I think it really depends on the kind of application that you're building. If I were building something where people were equally likely to interact through the web as through the API, then I think I would definitely recommend not choosing this divergence.

Ultimately, what I would recommend heavily depends on security requirements. At Unit21 we very deliberately separate the interfaces, the routes, the paths between what is data ingestion versus what is data exfiltration. That gives us a much better logical separation of what kinds of API routes we want to put into a private versus public subnet, and what exists and just fundamentally does not exist as a route in a more theoretically exposed environment. So ultimately, it's quite circumstantial.
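The idea of a route that "fundamentally does not exist" in an exposed environment can be sketched with two separate route tables that share back-end logic. This is an illustration only, not Unit21's implementation: the paths, handler names, and the assumption that ingestion is public while export is private are all hypothetical.

```python
# Shared back-end logic (hypothetical stand-ins for real services).
_EVENTS = []


def ingest_event(payload):
    """Store an incoming event; shared by both interfaces."""
    _EVENTS.append(payload)
    return {"status": 201, "body": {"accepted": True}}


def export_events(_payload=None):
    """Return all stored events; only wired into the private interface."""
    return {"status": 200, "body": list(_EVENTS)}


# Two separate route tables. The export route is never registered publicly,
# so it cannot be reached there even by mistake.
PUBLIC_ROUTES = {
    ("POST", "/v1/events"): ingest_event,
}
PRIVATE_ROUTES = {
    ("POST", "/internal/events"): ingest_event,
    ("GET", "/internal/events/export"): export_events,
}


def dispatch(routes, method, path, payload=None):
    """Look up a handler in the given table; unknown routes return 404."""
    handler = routes.get((method, path))
    if handler is None:
        return {"status": 404, "body": None}
    return handler(payload)
```

With this layout, a request for the export path against the public table returns 404 regardless of credentials, because the route is simply absent rather than present but protected.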

For us, I would make the same decision today to keep things separate, unless there existed a radically different type of approach to API security. I mentioned earlier that there were a couple of things that we would like to do differently. One of them is something along this route. We are starting to adopt API gateways and using tools like Kong to give us better control over API access infrastructure and rate limiting. So it’s something that we're exploring doing quite differently.

Unit21’s public APIs are RESTful. Can you talk about why you decided to offer RESTful APIs over, say, GraphQL?

I think that designing an API is very similar to building any other product that's customer facing, right? You just need to talk to users. Ask yourself what would minimize the friction between using you versus a competitor for example. You should always aim to build something that is useful and usable to customers. For Unit21 specifically, the decision to choose REST was shaped by our early customers, many of whom were the initial design partners for the API. We picked REST because it allowed us to fulfill all of what the customers needed, and gave them a friendly interface to interact with.

I've been in companies before where GraphQL was the design scheme for the public-facing APIs. And in those cases the main consumers of the APIs were already somewhat acclimatized to querying through GraphQL. Asking people who haven't used GraphQL to start consuming a GraphQL API is a pretty big shift. It's a steep learning curve, so you need to be really thoughtful if you’re considering that.

Also, you’ve recently released webhooks in beta. What was the impetus for offering webhooks, and has the customer response been positive?

That's right. Actually, we’ve had webhooks around for a while, but they hadn't always been consistent with our API endpoints. We recently revamped the whole thing to make everything consistent, and that’s why it’s labeled ‘beta’. Consistency was important to achieve because, at the end of the day, it's the same customer teams that are dealing with webhooks as with the push/pull APIs. We wanted to make sure the developer experience was consistent. And since we made the shift to consistency, the reaction has been great; customers were very happy with the change.
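One common way to keep webhooks consistent with pull endpoints is to route both through a single serializer. The sketch below assumes a hypothetical alert object and field names; it is not Unit21's schema or code, only an illustration of the pattern.

```python
import json


def serialize_alert(alert):
    """Single source of truth for the public shape of an alert (hypothetical fields)."""
    return {
        "alert_id": alert["id"],
        "status": alert["status"],
        "created_at": alert["created_at"],
    }


def api_response(alert):
    """Body returned when a client pulls the alert from the API."""
    return json.dumps(serialize_alert(alert), sort_keys=True)


def webhook_payload(alert, event_type):
    """Body pushed to a webhook; wraps the same serialized object in an envelope."""
    envelope = {"event_type": event_type, "data": serialize_alert(alert)}
    return json.dumps(envelope, sort_keys=True)


# Internal record with a field that must not leak into either interface.
alert = {
    "id": "a-1",
    "status": "open",
    "created_at": "2022-09-20T00:00:00Z",
    "internal_score": 0.97,
}
print(api_response(alert))
print(webhook_payload(alert, "alert.created"))
```

Because both paths call `serialize_alert`, a field added or renamed there shows up identically in pulled and pushed data.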

Developer Experience

What do you think constitutes a good developer experience?

Oof, this is a broad question, but a good one. All of us have struggled with API docs before; struggled with trying to relate the concept of what you're trying to do with what the API allows you to do. Of course, there are some really good examples of how documentation can be a great assist between what is intuitive and unintuitive. But I think that a really good developer experience is when the set of APIs maps closely to the intention of the majority of the users using the API, so that you don't have to rely on documentation to achieve most of what you're trying to do.

There are some APIs like this that I've worked with before that have tremendously intuitive interfaces. In those cases, you really only need to look at documentation for exceptions or to check that what you're doing is sane. And I think those APIs are clearly the result of developers with a good understanding of not only the problem you're trying to solve, but also the type of developers who will be using the API.

Closing

A closing question we like to ask everyone: any new technologies or tools that you’re particularly excited by? Doesn’t have to be API related.

Yeah, I think there's a bunch of really interesting developments within the datastream space. This is very near and dear to what we're doing at Unit21. A lot of the value of our company is undergirded by the quantity and quality of the data we can ingest from customers.

We're currently reworking our data architecture, implementing the next generation of what data storage and access looks like in our systems, and there's a set of interesting concepts around what is a datastream versus what is a database. I think we first started seeing this become a common concept with KSQL in Confluent, Kafka tables, etc. But now Rockset, Snowflake, and Databricks are all releasing products that allow you to think of data flowing into your system as both a stream and a data set. I think this duality allows for much more flexibility. It’s a very powerful concept, because you no longer have to think of data flowing into one place as either one or the other; it can be both, supporting multiple different types of use cases without sacrificing too much performance or storage.
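The stream and table duality can be shown with a toy in-memory structure: every write is appended to a log (the stream view) and also updates the latest value per key (the table view). This is a conceptual sketch only; the `StreamTable` class is hypothetical and does not represent how any of the products mentioned above are implemented.

```python
class StreamTable:
    """Toy structure exposing the same data as both a stream and a table."""

    def __init__(self):
        self.log = []    # append-only stream of (key, value) events
        self.table = {}  # latest value per key, i.e. the table view

    def append(self, key, value):
        """Record an event in the stream and update the table view."""
        self.log.append((key, value))
        self.table[key] = value

    def read_stream(self, offset=0):
        """Stream-style access: every event from a given offset onward."""
        return self.log[offset:]

    def lookup(self, key):
        """Table-style access: the current value for a key, or None."""
        return self.table.get(key)


st = StreamTable()
st.append("txn-1", {"status": "pending"})
st.append("txn-2", {"status": "pending"})
st.append("txn-1", {"status": "flagged"})

print(st.read_stream(offset=1))  # the events after the first one
print(st.lookup("txn-1"))        # the current state of txn-1
```

A real-time consumer would read from the stream side, while a dashboard or search query would read from the table side, with both backed by the same writes.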